In the 1820s, Charles Babbage designed the Difference Engine. It was intended to automate the computation of tables of commonly used functions, such as logarithms and cosines, which at the time were calculated by hand. The crank-operated machine would be initialized to perform a particular computation, the operator would crank away, and the engine would print the output on paper using movable type. Despite its limited repertoire, something miraculous was inherent in the design: it was the world's first programmable computer.

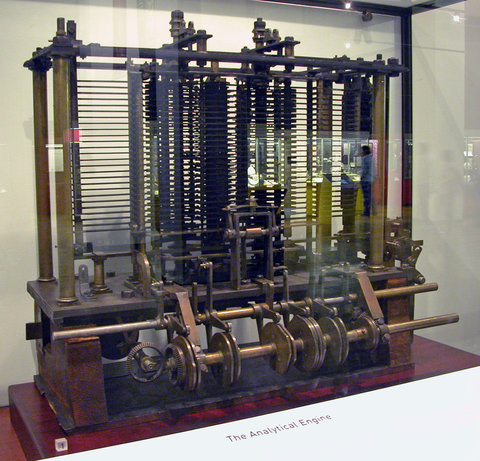

Babbage realized this. He also realized that the initialization of the engine, the programming phase, could itself be automated using something like punched cards, which would set the position of the engine's cogs. These punched cards would be the world's first "programs." Babbage reasoned that if the engine could punch its own cards and select which card to read next (perhaps from a stack), it could perform any conceivable computation. He called this new design the Analytical Engine. The year was 1837.

Babbage's great leap from a single-purpose trigonometry calculator to a universal computational engine is just one example of a pattern that has repeated itself throughout history.

Take written language, for example. Early writing systems consisted of pictographs representing physical objects: a tree, a bird, a spear. Later iterations allowed these icons, or glyphs, to be combined to produce the sound of the spoken word. For example, the word "bread" might be written using the glyphs for "Boy", "Rock", "Egg" and "Door". Today, our glyphs do not represent objects, but sounds. We can write any word as the sum of its sounds, where each sound is represented by a letter or combination of letters. It took several thousand years for our writing systems to make the jump to universality. It took Babbage around 20 years.

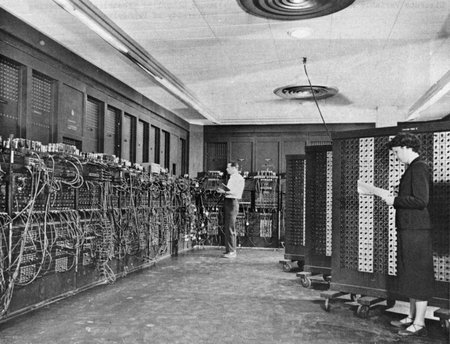

Unfortunately, the implications of Babbage's discovery were not appreciated by those who could have made it ubiquitous. It would be over one hundred years before the first computers were built. These computers, the ENIAC and the Colossus, were designed during the Second World War to solve artillery-aiming equations and perform code-breaking, respectively. After the war, the Colossus machines were dismantled. ENIAC went on to perform weather forecasting and played a role in the development of the hydrogen bomb.

The pattern of implementing a solution to a specific use case before extending it to universality is how many of us approach software development. An example: one hackathon ago, a few of us at SinglePlatform decided to write an application that uses optical character recognition to produce a digital representation of a menu from a printed copy. To demonstrate proof of concept, we first focused on a specific menu. We came up with rules like "if the font size is X, it must be a heading name; if it is Y, it must be an item description," and so on.

Rules like these are far from universal, and thus would likely not apply to a different menu. However, the underlying hypothesis, namely that typographical distinctions and the physical placement of words on a page contain inherent information about their content, is indeed universal. Therefore, creating a universal "menu reading machine" is a matter of identifying the "patterns" present on any given menu and deriving their meanings.
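To make the contrast concrete, here is a minimal Python sketch. It is not the code we wrote at the hackathon: the `Span` type, the function names, the role labels, and the font sizes are all hypothetical, and it assumes the OCR step has already produced text fragments tagged with a font size. The first function hard-codes sizes for one menu; the second only assumes that relative size carries meaning, which is one possible reading of the "universal" hypothesis.

```python
from dataclasses import dataclass


@dataclass
class Span:
    """A fragment of OCR output (hypothetical shape): text plus font size."""
    text: str
    font_size: float


def classify_fixed(span, heading_size=18.0, description_size=10.0):
    """Menu-specific rule: exact font sizes map directly to roles."""
    if span.font_size == heading_size:
        return "heading"
    if span.font_size == description_size:
        return "description"
    return "unknown"


def classify_relative(spans):
    """More general rule: rank the distinct font sizes found on this menu.

    Assumption for illustration: the largest size marks headings, the
    smallest marks descriptions, and anything in between is an item name.
    """
    sizes = sorted({s.font_size for s in spans}, reverse=True)
    labels = []
    for s in spans:
        if s.font_size == sizes[0]:
            labels.append("heading")
        elif s.font_size == sizes[-1]:
            labels.append("description")
        else:
            labels.append("item")
    return labels


# Two made-up menus that use the same visual hierarchy at different sizes.
menu_a = [
    Span("Appetizers", 18.0),
    Span("Bruschetta", 12.0),
    Span("Toasted bread with tomato and basil", 10.0),
]
menu_b = [
    Span("Starters", 24.0),
    Span("Calamari", 16.0),
    Span("Lightly fried, served with lemon", 11.0),
]

print([classify_fixed(s) for s in menu_b])  # fixed rules miss the second menu
print(classify_relative(menu_a))
print(classify_relative(menu_b))
```

The fixed rules label nothing on the second menu, because none of its sizes match the hard-coded values, while the relative rule assigns the same roles on both. A real menu would need more signals than font size (position, weight, alignment), so treat this as an illustration of the idea rather than a working menu reader.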

For more examples of humanity's journey to universality, check out The Beginning of Infinity by the brilliant David Deutsch.
